Responsible AI is often discussed as if artificial intelligence were mainly a risk that needs to be controlled. In education, policy and organisations, the conversation usually starts from the same assumption: AI is dangerous, biased or unreliable, so we should be careful.

But this misses a key question:

Is it sometimes irresponsible not to use AI?

That question is rarely asked. And if we care about ethics, it should be central.

What current research on Responsible AI focuses on

This week I learned about recent research by Maaike Harbers, Marieke Peeters, Francien Dechesne, M. Birna van Riemsdijk and Pascal Wiggers into the responsible use of AI in universities and universities of applied sciences. Nice piece of work.

It shows familiar patterns:

- AI ethics is addressed, but often in a fragmented way

- Integration across the curriculum is preferred over separate courses

- Content depends heavily on a few motivated lecturers

- Long-term institutional commitment is often missing

The recommendations make sense: better integration, shared resources, professional development, multidisciplinary perspectives, and clear governance.

But underneath many of these discussions lies an unspoken assumption:

AI is a problem first, and an opportunity second.

That framing deserves scrutiny.

Ethics is about making choices, not following rules

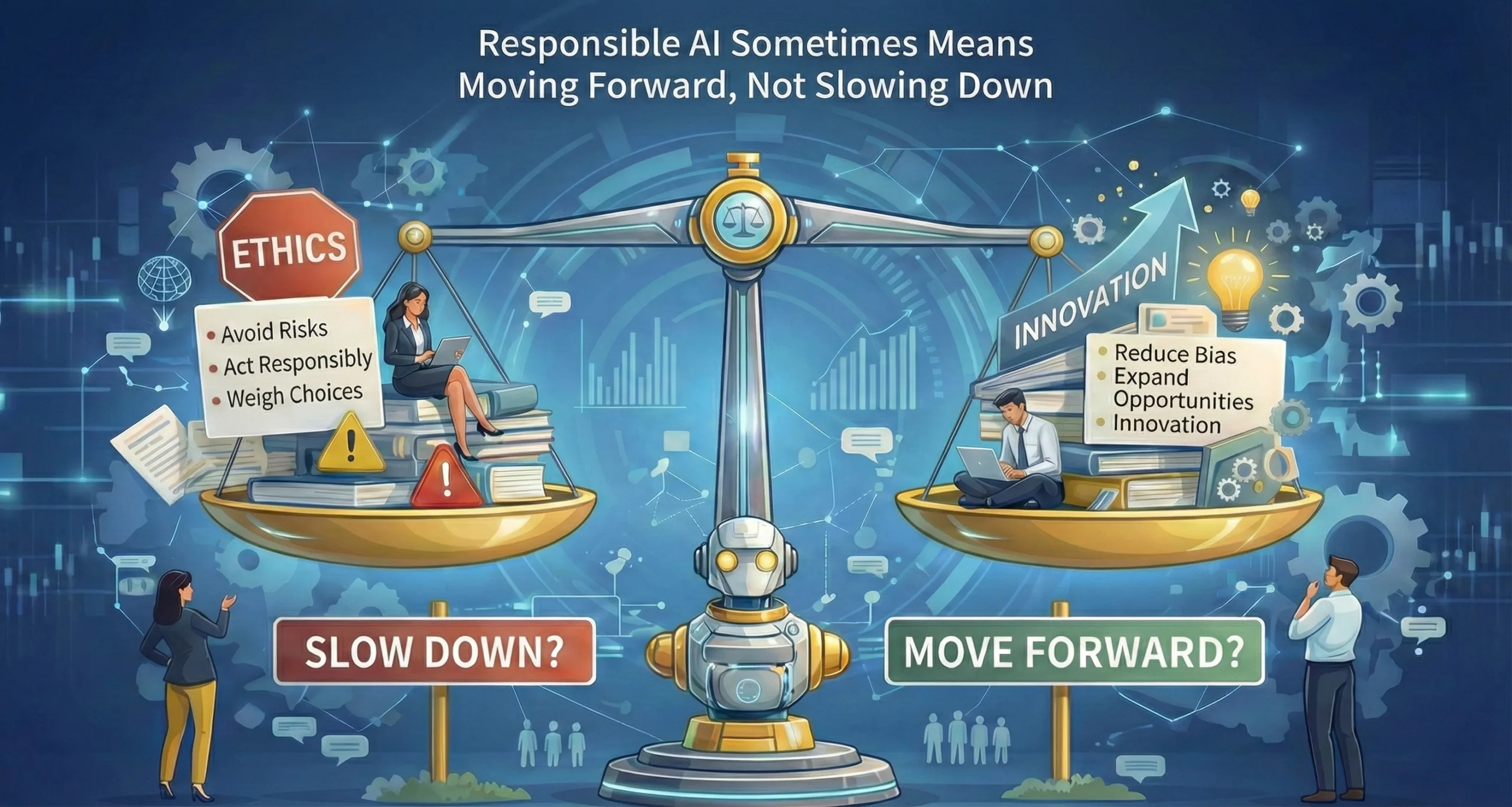

Ethics is not a checklist. It is not about applying fixed rules without thinking. Ethics is about weighing options, understanding context, and making choices you can explain and defend.

And sometimes, the responsible choice is to act.

Responsible choices do not automatically slow down innovation. In some cases, responsibility requires moving forward rather than holding back.

Humans are not neutral either

Many debates compare AI to an idealised version of human decision-making: fair, rational and unbiased. Reality looks very different.

We know from decades of research that humans regularly discriminate in hiring and selection. Often unconsciously. Often inconsistently. Often without being able to explain their decisions.

Well-designed AI systems, on the other hand:

- Make mistakes, but in a consistent way

- Can be tested, audited and improved

- Can be designed to ignore irrelevant personal characteristics

- Can focus on skills, experience and context rather than gut feeling

AI is not perfect. But pretending that humans are is not ethical either.

When using AI can be the responsible choice

A serious discussion about responsible AI asks different questions:

- What happens if we leave this decision to humans alone?

- What kinds of mistakes do they consistently make?

- Can careful use of AI reduce those mistakes?

- Is not acting also a choice, with real consequences?

In areas like work and employment, there is growing evidence that carefully designed matching systems (take a look at 8vance) can reduce bias and improve access to opportunities. Not despite the technology, but because of it.

This does not require blind trust in AI. It requires evidence, transparency and continuous evaluation.

From risk avoidance to responsible action

For education, this means expanding how we teach responsible AI:

- Not only where AI can fail, but where humans fail as well

- Not only rules and compliance, but responsible decision-making in context

- Not only when to slow down, but when moving forward is justified

That demands research, real-world cases and honest debate. Including uncomfortable questions.

In conclusion

Responsible AI is not about being for or against technology. It is about making better, well-reasoned choices.

Sometimes caution is necessary. Sometimes inaction causes harm. And sometimes using AI responsibly is the most ethical option available.

That nuance deserves a central place in the conversation. Especially in education. Especially now.